HERE Workspace & Marketplace 2.9 release

Highlights

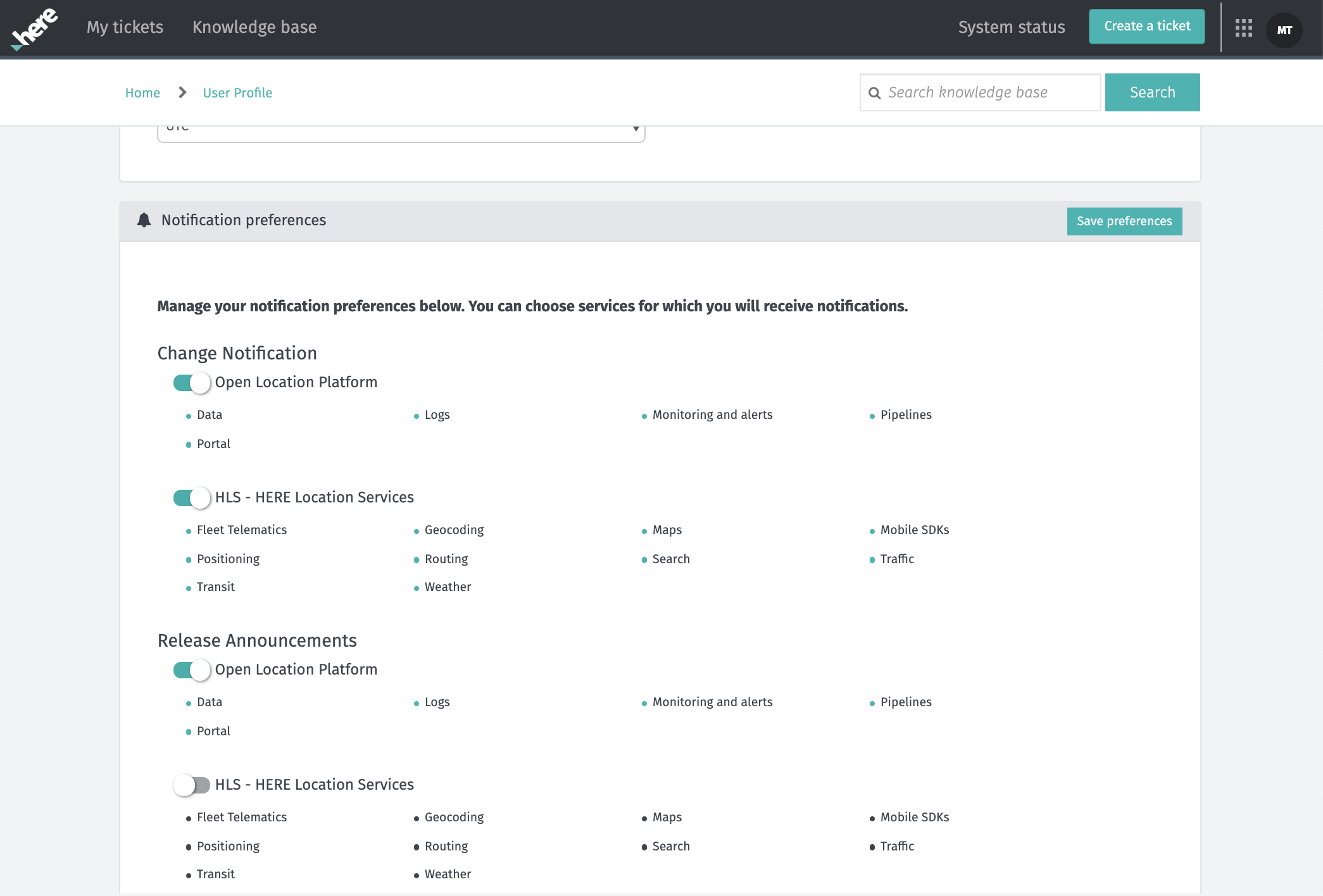

Manage Support and Product Update Email Subscriptions

Email opt in and opt out management is now available in the profile section of the support portal. This includes release announcements and change notifications for:

- HERE Open Location Platform Services

- HERE Location Services

Release announcements highlight the latest product features and change notifications provide updates about scheduled maintenance.

Note: The HERE support portal and subscription management are currently not available in China in conformance of regulatory rules.

Stream Pipeline Resource Improvement

Stream Pipeline Versions using Stream-2.x.x run-time environment can now be configured to use multiple task slots per Worker (Flink TaskManager). This functionality will improve the resource utilization of a memory-intensive Stream pipeline by allowing data caching and sharing within a Worker. It will also enable network connection sharing and reduce the network overhead. Running a stream pipeline with multiple task slots per Worker can considerably reduce its cost.

NOTE: The maximum number of task slots allowed per Worker is 1000.

Big Data Connectors

The Spark connector integrates OLP proprietary catalogs and layers with the industry-standard format of Spark (DataFrame), allowing you to leverage search, filter, map, and sort capabilities offered by the standard Apache Spark framework. In addition to previously released functions, the Spark connector now supports:

- Deletion of Versioned layer metadata (i.e. over-writing partition metadata so the data is not referenced by the latest Catalog version).

- All operations for Volatile layers: Read, Write and Delete (deletion of data and metadata).

With these updates, you can spend less time writing low-level pipeline/data integration code and more time on your use case-specific business logic.

Validate Data Integrity

This release provides capabilities to help ensure the integrity of your data in the Open Location Platform, helping meet ASIL and ISO 26262 standards compliance:

-

The ability to set a Digest or Checksum, a form of data integrity checking, as a layer configuration has been extended to Index layers so this capability is now possible across Versioned, Volatile and Index layers. Within the layer configuration, you can select one of the following industry standard functions: MD5, SHA1 or SHA-256. As long as this metadata is set in your layer configurations, your data consumers will be able to see that a Digest was used and which algorithm specifically so they can leverage it for downstream validation.

-

The ability to set a Cyclic Redundancy Check or CRC, an error detection mechanism, as a layer configuration for Versioned, Volatile and Index layers. Within the layer configuration, you can select CRC-32C (Castagnoli). Setting this layer configuration, your data consumers will understand a CRC was used and which algorithm specifically so they can leverage it for downstream validation. This CRC implementation helps support the ISO 26262 standard.

- As with all layer configurations, it is possible to set this metadata through all Data interfaces: API, Portal, Data Client Library and the CLI. Both configurations are optional and the default is "None". As a data producer, you calculate the digest and/or the CRC at the partition level according to your selected algorithm and write it to partition metadata. If you use the Data Client Library, it will perform these calculations for you based on the algorithm types you selected in the layer configuration.

What's in the works

Stay tuned for additional improvements to OLP that we're working on, including:

- Project-based administration for new resources - We are building upon our access management capabilities to introduce a new project-based permission model, enabling teams to easily administer collections of resources and realize automatic usage reporting with customer-defined projects.

- Time-based scheduling of Pipelines - Automate data processing by scheduling batch pipelines based on time, without the need for an external scheduling service.

- Spark UI for historical Batch Pipelines - Ensure business continuity by quickly troubleshooting historical Batch pipeline jobs using Spark UI.

-

Spatial query improvements to better support Index layer queries - We continue to work on Index layer query performance, especially for large data sets. That said, we will soon support queries submitted via any size bounding box, removing limits which impede such queries and result in error messages today.

- Big Data connectors - The Flink connector integrates OLP proprietary catalogs and layers with the industry standard format of Flink (TableSource), allowing you to leverage the full functionality of searching, filtering, mapping, sorting and more offered by the standard Apache Flink framework.

- The Flink connector will soon support the following additional operations, which means you can spend less time writing low level pipeline/data integration code and more time on your use case specific business logic:

- Versioned layer reads for metadata and data.

- Index layer reads and writes for metadata and data.

- Volatile layer reads and writes for metadata and data.

- The Flink connector will soon support the following additional operations, which means you can spend less time writing low level pipeline/data integration code and more time on your use case specific business logic:

- Data consumers can complete commercial subscription process online - When browsing available HERE commercial data in the Marketplace, data consumers can complete the subscription process by accepting the standard pricing and terms online. After successfully subscribed to the data, customers will receive subscription billing on their monthly invoice.

Changes, Additions and Known Issues

SDK for Java and Scala

To read about updates to the SDK for Java and Scala, please visit the SDK Release Notes.

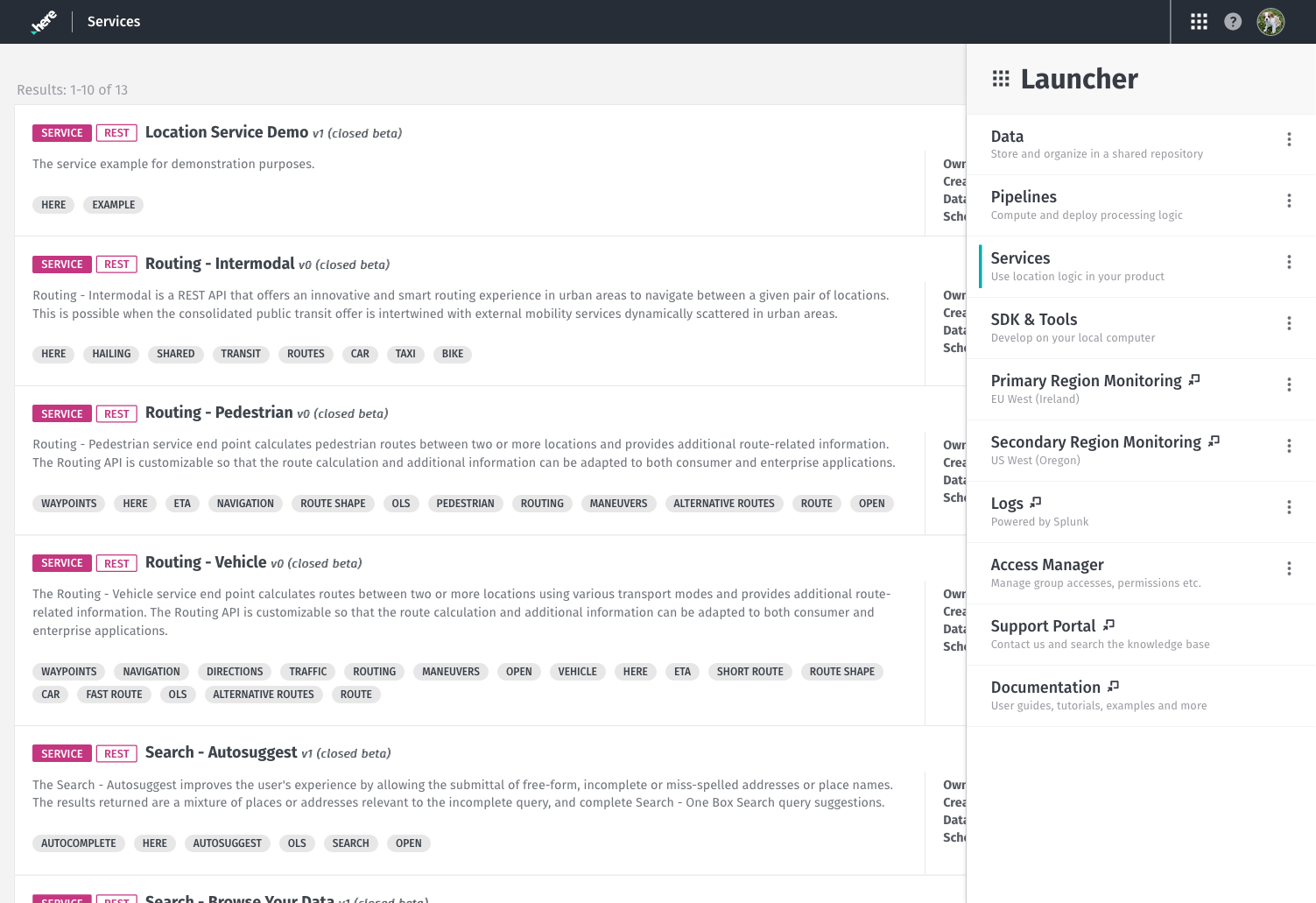

Web & Portal

Added: Links to the Support Portal and Documentation are now available in the Launcher. These links remain available in the support menu as well.

Issue: The custom run-time configuration for a Pipeline Version has a limit of 64 characters for the property name and 255 characters for the value.

Workaround: For the property name, you can define a shorter name in the configuration and map that to the actual, longer name within the pipeline code. For the property value, you must stay within the limitation.

Issue: Pipeline Templates can't be deleted from the Portal UI.

Workaround: Use the CLI or API to delete Pipeline Templates.

Issue: In the Portal, new jobs and operations are not automatically added to the list of jobs and operations for a pipeline version while the list is open for viewing.

Workaround: Refresh the Jobs and Operations pages to see the latest job or operation in the list.

Account & Permissions

Issue: A finite number of access tokens (~ 250) are available for each app or user. Depending on the number of resources included, this number may be smaller.

Workaround: Create a new app or user if you reach the limitation.

Issue: Only a finite number of permissions are allowed for each app or user in the system across all services. It will be reduced depending on the inclusion of resources and types of permissions.

Issue: All users and apps in a group are granted permissions to perform all actions on any pipeline associated with that group. There is no support for users or apps with limited permissions. For example, you cannot have a reduced role that can only view pipeline status, but not start and stop a pipeline.

Workaround: Limit the users in a pipeline's group to only those users who should have full control over the pipeline.

Issue: When updating permissions, it can take up to an hour for changes to take effect.

Data

Changed: OrgID is now added to Catalog HRNs to create greater isolation and protection between individual organizations. Eventually this change will also support the ability for Org Admins to manage all catalogs in their Orgs. With this release, Catalog HRNs updated to include Org information are listed in the OLP Portal and are returned by the Config API. Data Client Library versions higher than 0.1.394 will automatically and transparently deal with this change. Referencing catalogs by old HRN format without OrgID OR by CatalogID will continue to work for (6) months until May 29, 2020. After May 29, 2020, it is important to note that Data Client Library versions 0.1.394 and lower will stop working.

Note:

- Deployment of this change in China should follow within one week of RoW.

- Always use API Lookup in all workflows to retrieve catalog specific baseURL endpoints. Using API Lookup will mitigate impacts to your workflows from this change or any other changes in the future when baseURL endpoints change.

- With this change, API Lookup will return baseURLs with OrgID in HRNs for all APIs where previously API Lookup returned baseURLs with CatalogID or HRNs without OrgID.

- Directly using HRNs or legacy CatalogIDs or constructing baseURLs with them in an undocumented way will lead to broken workflows and will no longer be supported after May 29, 2020.

Fixed: an issue where partition list information normally available within the Layer/Partitions tab of the Portal UI was not displaying correctly for newer Volatile layers created after September 10, 2019. Published Volatile layer partitions are now displaying correctly within the Partitions tab of Volatile Layer pages.

Issue: The change released with OLP 2.9 to add OrgID to Catalog HRNs could impact any use case (CI/CD or other) where comparisons are performed between HRNs used by various workflow dependencies. For example, requests to compare HRNs that a pipeline is using vs what a Group, User or App has permissions to will result in errors if the comparison is expecting results to match the old HRN construct. With OLP 2.9, Data APIs will return only the new HRN construct which includes the OrgID (e.g. olp-here…) so a comparison between the old HRN and the new HRN will be unsuccessful.

Also: The resolveDependency and resolveCompatibleDependencies methods of the Location Library may stop working in some cases until this known issue is resolved.

Reading from and writing to Catalogs using old HRNs is not broken and will continue to work for (6) months.

Workaround: Update any workflows comparing HRNs to perform the comparison against the new HRN construct, including OrgID.

Issue: The Data Archiving Library does not yet support the latest CRC or Digest features released for Index layers. Using the Data Archiving Library, the CRC and Digest calculations should be automatically calculated if these layer configurations are selected in the Index layer BUT they are not. This issue will be corrected in the next OLP release.

Issue: Catalogs not associated with a Org are not visible in OLP.

Issue: Visualization of Index Layer data is not yet supported.

Issue: When you use the Data API or Data Library to create a Data Catalog or Layer, the app credentials used do not automatically enable the user who created those credentials to discover, read, write, manage, and share those catalogs and layers.

Workaround: After the catalog is created, use the app credentials to enable sharing with the user who created the app credentials. You can also share the catalog with other users, apps, and groups.

Pipelines

Issue: A pipeline failure or exception can sometimes take several minutes to respond.

Issue: Pipelines can still be activated after a catalog is deleted.

Workaround: The pipeline will fail when it starts running and will show an error message about the missing catalog. Re-check the missing catalog or use a different catalog.

Issue: If several pipelines are consuming data from the same stream layer and belong to the same Group (pipeline permissions are managed via a Group), then each of those pipelines will only receive a subset of the messages from the stream. This is because, by default, the pipelines share the same Application ID.

Workaround: Use the Data Client Library to configure your pipelines to consume from a single stream: If your pipelines/applications use the Direct Kafka connector, you can specify a Kafka Consumer Group ID per pipeline/application. If the Kafka consumer group IDs are unique, the pipelines/applications will be able to consume all the messages from the stream.

If your pipelines use the HTTP connector, we recommend you to create a new Group for each pipeline/application, each with its own Application ID.

Issue: The Pipeline Status Dashboard in Grafana can be edited by users. Any changes made by the user will be lost when updates are published in future releases because users will not be able to edit the dashboard in a future release.

Workaround: Duplicate the dashboard or create a new dashboard.

Issue: For Stream pipeline versions running with the high-availability mode, in a rare scenario, the selection of the primary Job Manager fails.

Workaround: Restart the stream pipeline.

Issue: When a paused Batch pipeline version is resumed from the Portal, the ability to change the execution mode is displayed but the change actually doesn't happen. This functionality is not supported yet and will be removed soon.

Issue: When a paused pipeline is resumed from the Portal, the ability to change the runtime credentials is displayed but the change actually doesn't happen. This will be fixed soon and you will be able to change the runtime credentials from the Portal while resuming a pipeline version.

Marketplace

Changed: Previously when you deactivated an active subscription, there was a 14-day grace period applied before the data access was disabled. This is now changed. You can deactivate an active subscription for a data consumer on the current date or select any future date up to 365 days in the future.

Issue: Users do not receive stream data usage metrics when reading or writing data from Kafka Direct.

Workaround: When writing data into a stream layer, you must use the ingest API to receive usage metrics. When reading data, you must use the Data Client Library, configured to use the HTTP connector type, to receive usage metrics and read data from a stream layer.

Issue: When the Technical Accounting component is busy, the server can lose usage metrics.

Workaround: If you suspect you are losing usage metrics, contact HERE technical support for assistance rerunning queries and validating data.

Notebooks

Deprecated: OLP Notebooks has been deprecated, please refer to the advantages and enhancements offered in the new OLP SDK for Python instead. Download your old notebooks and refer to the Zeppelin Notebooks Migration Guide for further instructions.

OLP SDK for Python

Issue: Currently, only MacOS and Linux distributions are supported.

Workaround: If you are using Windows OS, we recommend that you use a virtual machine.

Have your say

Sign up for our newsletter

Why sign up:

- Latest offers and discounts

- Tailored content delivered weekly

- Exclusive events

- One click to unsubscribe